-

Looking For Aliens

Recently I’ve been helping the Berkeley SETI project with their data processing pipeline and learning a lot about SETI and radio astronomy in the process. I thought I’d write about how it works because I think it’s really interesting. This blog post will assume you don’t know anything about astronomy so apologies if you are such a savvy astronomer that this bores you.

Types Of Telescope

The normal sort of telescope, the sort you might look through to see the Moon, that is an optical telescope. Optical telescopes make it easier to see distant light. This is an optical telescope that you can buy for $100 at Amazon:

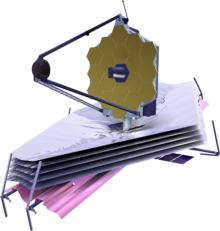

And this is an optical telescope that cost $10 billion or so:

Well, maybe it isn’t precisely correct to call the James Webb Space Telescope an optical telescope. It’s like a D&D elf: it can see regular light and also infrared. But infrared is right next to regular light, so it’s at least very close to an optical telescope. The Hubble sees visible light but also some ultraviolet and infrared. In general these space telescopes are doing similar things to your backyard telescope, they are just much better at it.

There is also a totally different sort of telescope called a radio telescope. A radio telescope is what James Bond fought on top of in GoldenEye. This is a radio telescope:

In particular this is the Green Bank Telescope, known to its friends as the GBT. It’s in the middle of nowhere in the Allegheny Mountains in West Virginia. It doesn’t sense light, it senses radio waves. In general these radio telescopes are doing similar things to the radio receiver in your car, they are just much better at it.

For physics reasons, optical telescopes get a lot of interference from the sky and the atmosphere. So when you want an optical telescope to analyze space really well, you get it up into space. Radio telescopes, on the other hand, get a lot of interference from radio waves. So the area around the Green Bank Telescope is blocked off as the National Radio Quiet Zone. They restrict radio stations and they try to get people to not run microwaves within 20 miles of the telescope.

I’ve mostly been dealing with data from the Green Bank Telescope, so I’ll focus on the data it provides in this post. (Green Bank is a single dish telescope, which means it has one big dish. You can do weirder things like beamforming with arrays of many smaller dishes.)

The Output of a Radio Telescope

You can think of a normal video feed as containing four-dimensional data: the x dimension, the y dimension, the time dimension, and the color dimension. You get a “brightness” for each point defined in those four dimensions. In most image processing you just think of the color as one entity that you happen to store as an RGB triplet, but you can also think of it as your camera providing only three pixels of resolution in the color dimension, because most human eyes can’t distinguish finer detail in that dimension anyway.

So what sort of data do you get from a radio telescope? The GBT typically uses a “single pixel” detector. That means you don’t get an x dimension or a y dimension. It’s just looking at one single spot in the sky. But you get a ton of resolution in the time dimension and the color dimension. When it’s a radio wave, we don’t call it “color”, we call it “frequency”, but it’s the same underlying physical thing. So the data is two-dimensional: time and frequency.
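To make the dimension-counting concrete, here is a minimal NumPy sketch of the two shapes. The sizes are made up for illustration (a short 640x480 clip with three color channels, and a spectrogram with 16 time steps and about a million frequency channels). They are not the GBT’s actual configuration.

```python
import numpy as np

# Illustrative sizes only, not the GBT's real configuration.
# A short video clip: (time, y, x, color), with 3 color "pixels" (R, G, B).
video = np.zeros((10, 480, 640, 3), dtype=np.uint8)

# A single-pixel radio recording: (time, frequency). There is no x or y axis,
# but there are over a million channels along the frequency axis.
spectrogram = np.zeros((16, 1_048_576), dtype=np.float32)

print(video.shape, spectrogram.shape)  # (10, 480, 640, 3) (16, 1048576)
```

The radio data trades away both spatial axes for vastly more resolution along the one axis that a camera squashes down to three values.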

There is a set of people based in West Virginia who operate the telescope, and then different research groups from around the world rent it for blocks of time. There’s a relatively small datacenter on-site and the different research groups run their own computer systems in that datacenter. The GBT is a pretty good telescope so it outputs a lot of data. The precise details depend on the configuration, but the typical raw output of a day’s session might be on the order of hundreds of terabytes. So with one of the larger data consumers like the Berkeley SETI program you have nontrivial issues just around the amount of data you are processing.
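For a sense of scale, here is the arithmetic that turns a daily volume into a sustained data rate. The 300 TB figure is a hypothetical point inside “hundreds of terabytes”, not a measured number.

```python
# Assumption: 300 TB per day, a hypothetical value inside "hundreds of terabytes".
TERABYTE = 10**12
bytes_per_day = 300 * TERABYTE
seconds_per_day = 24 * 60 * 60

rate_gb_per_s = bytes_per_day / seconds_per_day / 10**9
print(f"{rate_gb_per_s:.1f} GB/s sustained")  # 3.5 GB/s sustained
```

At that rate you would fill a 1 TB drive roughly every five minutes.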

Doppler Drift

The fundamental idea behind this sort of SETI is, maybe aliens are emitting radio signals, so let’s look for radio signals coming from somewhere outside our solar system.

It’s harder than you might expect to tell where a radio signal is coming from. In particular, you need to distinguish between a radio signal that’s coming from aliens in outer space and a radio signal that’s coming from radio interference on Earth.

One way you can tell if a signal is coming from outer space is to move the antenna around. Point at star A for a while, then point at star B for a while, then point back at A for a while, et cetera. A signal that shows up when you are pointed both at star A and at star B is probably just interference.
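Here is a minimal sketch of that on/off logic. It assumes a hypothetical cadence of six observations that alternates between the target star and other stars, and for each observation it records whether a signal was detected at a given frequency. The function name and data layout are made up for this example. A real pipeline also has to decide whether detections in different observations are “the same” signal, which this sketch skips entirely.

```python
from typing import Sequence

def looks_like_sky_signal(on_target: Sequence[bool], detected: Sequence[bool]) -> bool:
    """Return True if a signal appears in every on-target observation
    and in none of the off-target ones.

    on_target[i] says whether observation i pointed at the target star;
    detected[i] says whether the signal showed up in observation i.
    """
    if len(on_target) != len(detected):
        raise ValueError("cadence and detections must have the same length")
    seen_in_all_on = all(d for o, d in zip(on_target, detected) if o)
    seen_in_any_off = any(d for o, d in zip(on_target, detected) if not o)
    return seen_in_all_on and not seen_in_any_off

# Target, other star, target, other star, target, other star.
cadence = [True, False, True, False, True, False]

print(looks_like_sky_signal(cadence, [True, False, True, False, True, False]))  # True
print(looks_like_sky_signal(cadence, [True] * 6))  # False: shows up everywhere, so interference
```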

Another way is to use the Doppler effect. You probably learned about this in a physics class. The Doppler effect is why a fire truck’s siren sounds different when the truck is driving toward you than when it’s driving away from you: the pitch of a sound changes when its source is moving relative to you. A radio wave doesn’t have a pitch, it has a frequency, and the frequency of a radio wave likewise changes when its source is moving relative to you.

By itself, that doesn’t tell us anything. If you only hear a one-second recording of a fire truck, you can’t tell whether it was moving toward you or away from you, because you don’t know what the “natural pitch” of that fire truck is.

However, if a source is accelerating relative to you, you can detect that without knowing the natural frequency. When a source is accelerating, the frequency will seem to change steadily over time. This is called “Doppler drift”.
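In symbols, using the non-relativistic Doppler formula (fine when the line-of-sight speed v_r is far below the speed of light c; take v_r positive when the source is moving away):

```latex
f_{\text{obs}} \approx f_0 \left(1 - \frac{v_r}{c}\right)
\qquad\Longrightarrow\qquad
\frac{d f_{\text{obs}}}{dt} \approx -\frac{f_0}{c}\,\frac{d v_r}{dt} = -\frac{f_0}{c}\,a_r
```

Here f_0 is the natural frequency and a_r is the line-of-sight acceleration. The drift rate depends on f_0 only as an overall scale factor, and since f_obs is very close to f_0 when v_r is much smaller than c, you can estimate the acceleration from the observed frequency and its drift without knowing the natural frequency exactly.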

Fortunately, basically everything in outer space is accelerating relative to you, because as the Earth rotates, you’re constantly accelerating toward the Earth’s axis of rotation. So for a source in outer space, you should see some Doppler drift. The magnitude of the drift depends on whether the object is just cruising along, or whether it’s on the surface of its own planet, or orbiting around something.
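To get a feel for the size of the effect, here is a back-of-envelope sketch of the largest drift that Earth’s rotation alone can produce. It ignores Earth’s orbit around the Sun and pretends the entire centripetal acceleration points along the line of sight, so it gives an upper bound rather than what any particular observation sees. The 1.4 GHz input is just an illustrative radio frequency, and the function name is made up for this example.

```python
import math

C = 299_792_458.0        # speed of light, m/s
EARTH_RADIUS = 6.378e6   # equatorial radius, m
SIDEREAL_DAY = 86_164.1  # seconds per full rotation

def max_rotation_drift_hz_per_s(freq_hz: float, latitude_deg: float = 0.0) -> float:
    """Upper bound on drift rate (Hz/s) caused by Earth's rotation alone.

    Uses the centripetal acceleration omega^2 * r, where r is the distance
    from the observer to Earth's rotation axis, and assumes all of it lies
    along the line of sight.
    """
    omega = 2 * math.pi / SIDEREAL_DAY
    r = EARTH_RADIUS * math.cos(math.radians(latitude_deg))
    accel = omega**2 * r  # about 0.034 m/s^2 at the equator
    return freq_hz * accel / C

print(f"{max_rotation_drift_hz_per_s(1.4e9):.3f} Hz/s")  # 0.158 Hz/s
```

At Green Bank’s latitude, about 38 degrees north, the bound drops to roughly 0.12 Hz/s.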

Examples

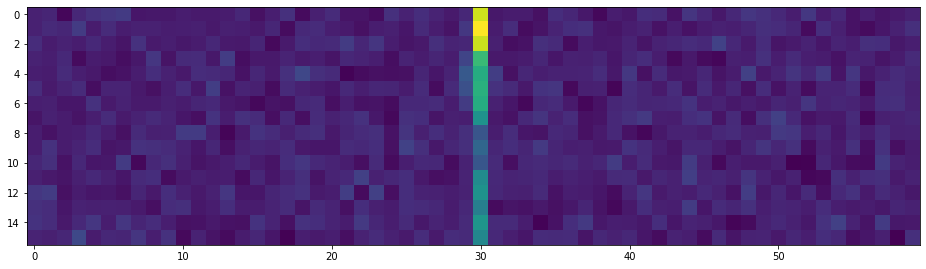

To me, this makes more sense when I look at the data. Here’s a recording that shows no Doppler drift, so it’s probably some sort of terrestrial interference:

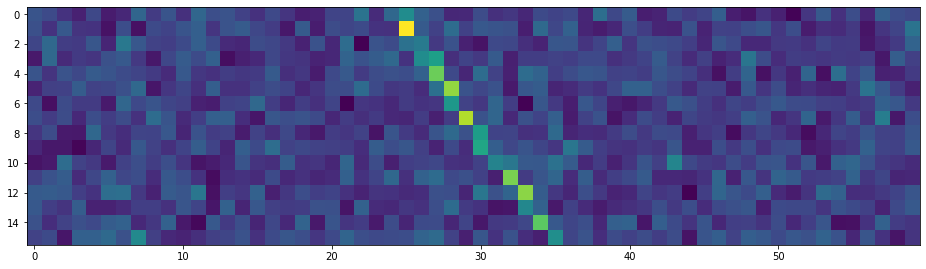

And here’s a recording that shows Doppler drift. I don’t know what it is (probably still some sort of interference rather than aliens), but it’s coming from a source that’s accelerating relative to the receiver, and that property alone filters out the vast majority of interference.

This data is heavily compressed from the original form that the telescope receives. The vertical axes on these plots represent about five minutes of recording. The horizontal axes are frequency at a very fine resolution: each pixel represents a fractional change in the frequency of about 3e-10. So imagine if your normal radio could pick up roughly 7 million different radio stations between 101.1 and 101.3 MHz.
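As a rough check on the radio-station analogy, here is the arithmetic. It assumes the 3e-10 fractional resolution applies uniformly across that slice of the FM band, so treat it as an order-of-magnitude comparison.

```python
FRACTIONAL_RESOLUTION = 3e-10  # per-pixel fractional frequency change

center_hz = 101.2e6  # middle of the 101.1 to 101.3 MHz band
band_hz = 0.2e6      # width of that band

channel_width_hz = center_hz * FRACTIONAL_RESOLUTION
n_channels = band_hz / channel_width_hz

print(f"{channel_width_hz:.3f} Hz per channel, about {n_channels / 1e6:.1f} million channels")
# 0.030 Hz per channel, about 6.6 million channels
```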

Conclusion

So the SETI data pipeline is basically, collect lots of data pointing at different things. Find cases where you see a diagonal line when you point at a particular target, and no diagonal line when you point away from that target. When you see something, notify the humans to check it out.
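The “find a diagonal line” step is often called a de-Doppler search. Here is a deliberately naive NumPy sketch: try every integer drift rate and every starting channel, sum the power along that straight line, and keep the strongest one. The function name, the integer-channel drift grid, and the synthetic test data are all assumptions for illustration. Production searches use much faster tree-style algorithms and typically report every line above a signal-to-noise threshold, not just the single best one.

```python
import numpy as np

def brute_force_dedoppler(spectrogram: np.ndarray, max_drift: int):
    """Find the strongest straight line through a (time, frequency) array.

    For each integer drift d (channels per time step) in [-max_drift, max_drift]
    and each starting channel, sum the power along channel = start + d * t.
    Lines that would run off the edge of the band are skipped.
    Returns (best_sum, start_channel, drift).
    """
    n_times, n_chans = spectrogram.shape
    t = np.arange(n_times)
    best = (-np.inf, None, None)
    for drift in range(-max_drift, max_drift + 1):
        for start in range(n_chans):
            chans = start + drift * t
            if chans.min() < 0 or chans.max() >= n_chans:
                continue
            total = spectrogram[t, chans].sum()
            if total > best[0]:
                best = (total, start, drift)
    return best

# Synthetic data: Gaussian noise plus one line drifting 2 channels per time step.
rng = np.random.default_rng(0)
data = rng.normal(0.0, 1.0, size=(16, 256))
for step in range(16):
    data[step, 100 + 2 * step] += 10.0

power, start, drift = brute_force_dedoppler(data, max_drift=4)
print(start, drift)  # 100 2
```

A signal with zero drift would show up as a vertical line (drift 0), which is exactly the kind of result the Doppler argument says to be suspicious of.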

I have theories about how well this is working, the parts that could be improved, why we should build a moon base, all sorts of ideas. But this post is getting long so I think I’ll call it here. Let me know if you have any questions - I think the process of explaining it helps me understand things better myself.

-

r/antiwork and The Gap

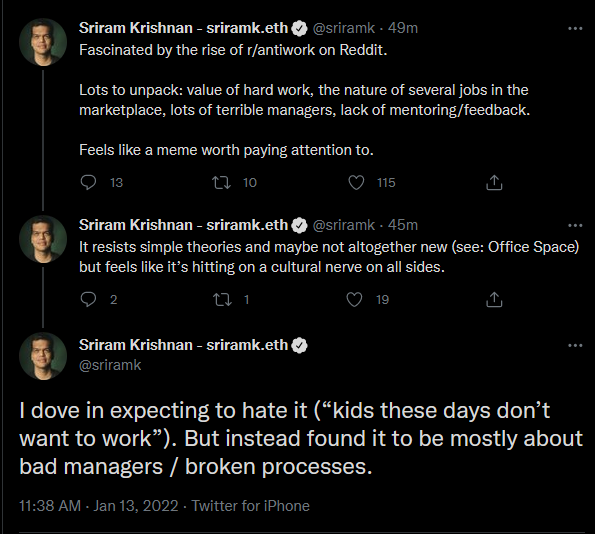

Recently the Antiwork subreddit has gotten really popular. I was interested by Sriram’s analysis:

and the subsequent response on r/antiwork.

I feel like “bad managers and broken processes” isn’t quite what’s going on here. That does come up a lot in the top stories. Someone gets fired for offending one ridiculous customer, someone gets a $40 tip and then their boss takes it away, managers do mean things to try to convince people to stay at their job after they quit. The absolute worst stories, those often have bad managers and broken processes. But the core problem is that many jobs are just fundamentally miserable, too miserable for good managers or good processes to fix.

After I graduated college and before I started grad school, I spent the summer in Boston living with a friend, and I was looking for a summer job just to have something to do. It was harder than I expected to find something; I couldn’t find a job that was happy to take someone for just a few months. I ended up lying on my resume and saying I was an artist who dropped out of college. Finally I got a job at The Gap.

Working at The Gap, the processes seemed fine, and the managers were very kind and helpful people. It’s not a hard job. You basically just stand around, fold clothes all day, and watch the other employees to make sure nobody steals anything. But, I think if I worked there my entire life I would hate capitalism and become a huge antiwork supporter.

The Gap is not great

There are three main problems with the job. One problem is that it was extremely boring. Another problem is that you made about $7 an hour. At 40 hours a week that’s somewhat over the poverty level. But the third problem is that you can’t even get 40 hours a week. There’s no commitment to how many hours a week of work you can get. I think this was fundamental to the nature of the business: the management had projections of expected foot traffic based on seasonal trends, past holiday performance, and new promotions, and those projections varied a lot. I don’t really think better processes would change this. As long as The Gap is allowed to hire part-timers with shifts chosen at a moment’s notice, they will choose to do so.

There were two types of worker at The Gap. The “transients”, like me, were just working a minimum wage job for a little while and not too concerned about making the maximum amount of money we could. The “lifers” were older, had responsibilities, and tended to grab as many shifts as they could. You couldn’t survive as a lifer unless you had a second job. But the stress of two part-time jobs spreads out far beyond your work hours. Every weekday, every weekend day, you’re hoping for one of the two to come through, and if you really need the money, you can’t plan anything in advance.

Can good managers or good processes do anything here? The job just sucks and it always will suck. At least nowadays if you do gig economy work for Uber or DoorDash you can choose when your part-time work is. That seems way better for second jobs than trying to get compatible shifts from two bosses.

Learning things

That summer I learned so much about folding clothes. I was really, really good at folding clothes: just one second and it’s snapped into a crisp rectangle. Then a year later I forgot all my skills, and nowadays the quality of my folding is quite unimpressive. More importantly, it showed me how terrible some jobs are and how grateful I should be to not be stuck in one.

The antiwork movement makes a lot of sense for people who are stuck in a bad job. It isn’t “solution-based” like people advocating communism or a universal basic income. But, maybe that’s a good thing, because neither communism nor a universal basic income is a great solution. The antiwork community is a place for sharing how unhappy people are with their jobs. It’s growing, waiting for a better solution to arise.

At the end of the summer I went to pick up my last paycheck on the way to the airport. I couldn’t just say, hey I had a grad school fellowship lined up in California this whole time, bye! So I made up a story about how my cousin got me a job working at a casino so I was moving to Vegas, and as I left, a coworker ran after me and was like… “Can your cousin get me a job too? Please, help, you have to at least try!”

I said I would try and then never got back to him. I hope he found something better.

-

Infrastructure: Growth vs Wealth

I’m a big fan of Tyler Cowen’s book Stubborn Attachments and the philosophy that he outlines there. One of the core principles is that economic growth is more important than any random good thing for a society to have, because in the long run a richer society will be able to afford more of the good things, and economic growth compounds, so by optimizing for economic growth you will perform better in the long run anyway. I basically agree with this and only want to ponder tweaking little details around the edges.

Wealth Plus

Clearly you don’t just want to optimize for plain GDP. There are other things that are valuable. In Stubborn Attachments Cowen’s term for this is “Wealth Plus” - you don’t just count money in the bank, you imagine adding in some term for free time, happy personal relationships, the other good things in life, et cetera.

I think there is another dimension that matters, though. Some economic activity is an end in and of itself. You pay someone to give you a massage. They give you a massage. It feels nice. The end. Versus, some economic activity builds on itself. It creates something either directly or as a byproduct that will make more economic activity possible. Kind of like “infrastructure”. You pay someone to build a bridge, now you have a valuable bridge, but also the existence of this bridge makes more new things possible, even if you charge for the use of the bridge.

Imagine two economies. In economy A the “end goal” economy grows 2% a year and the “infrastructure” economy grows 1% a year. In economy B it’s reversed. You won’t notice immediately, but before long won’t new innovation become fundamentally more possible in economy B?
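The compounding is easy to check numerically. This sketch assumes both sectors start out the same size (1.0) in both economies, which is a simplification for illustration.

```python
# Assumption: each sector starts at size 1.0 in both economies.
years = 50
a_end, a_infra = 1.02**years, 1.01**years  # economy A: end-goal sector grows faster
b_end, b_infra = 1.01**years, 1.02**years  # economy B: infrastructure grows faster

print(f"Total output, A vs B: {a_end + a_infra:.2f} vs {b_end + b_infra:.2f}")
print(f"Infrastructure in B vs A after {years} years: {b_infra / a_infra:.2f}x")
# Total output, A vs B: 4.34 vs 4.34
# Infrastructure in B vs A after 50 years: 1.64x
```

Measured by total output, the two economies are identical. That is the sense in which you won’t notice, even though after 50 years economy B has about 64% more infrastructure to build on.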

I feel like normal economists should be analyzing things this way but I can’t really find anything along these lines.

What Counts as “Infrastructure”?

Last summer there were lots of arguments over what counted as infrastructure because there was some bill funding infrastructure and people were fighting about what should go into it. This is related in spirit - what parts of the economy are worth extra investment in? Although, really I don’t want to try to philosophically influence politics. Just too zero-sum. I am interested in this question more because it affects what I would like to be working on, to invest in, or to encourage my kids to get into.

Under the system I am thinking about here, the “infrastructure” parts of the economy are the ones that, when they get better, help other parts of the economy. Video games are not infrastructure. Cloud services are infrastructure. Those ones are easy.

Recently China has been trying to steer their economy in more particular ways. Read Dan Wang’s 2021 letter if you haven’t. Are they doing it in the right way? In general, Beijing seems to be anti “consumer internet”. But I think a lot of the consumer internet actually should count as infrastructure.

For example, food delivery. To me, food delivery apps seem like great infrastructure. It just saves you time when you can easily get food delivered, instead of picking it up yourself or using some slower, less efficient delivery method. Anything that saves people time seems like infrastructure, because those people will then be able to spend more time doing other things. Like work more. So I think food delivery should count as infrastructure. And personally grocery delivery is one of my favorite technological advances of the past decade.

The same goes for child care. Child care is pretty clearly infrastructure by this definition because it helps people work. It’s easier to get workers if it’s easy for your workers to get child care.

Tougher Questions

Education is an interesting question. I’m not sure what to think of the Chinese crackdown on online education. In theory, education should be infrastructure. When people learn more and become more intelligent, it helps out all sorts of sectors of the economy that depend on intelligent workers. But, maybe in practice some sorts of online education are like a pure game to get a credential, rather than learning something useful.

Is social media infrastructure? It sort of lets you do something, but it isn’t really helping you work. It’s closer to being an addictive waste of time. It’s kind of insufficient to only think of it from an individual’s point of view, though. From an advertiser’s point of view, targeted ads are great infrastructure. They help a lot of businesses get going. It’s tricky, though, because I think the sort of businesses that are most helped by targeted ads are the sort of consumerist businesses that are “not infrastructure” themselves. Like ads for games, clothes, any sort of random item that you consume yourself. This almost hints at a second category - the sort of infrastructure that helps other infrastructure. Shouldn’t that be a better sort of infrastructure to invest in? Yeah, this line of reasoning both makes sense and kind of reminds me of eigenvalues. There should be some “first eigenvector” that defines the optimal type of infrastructure investment. Maybe there’s some normality condition that our economy doesn’t obey, though…
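To make the eigenvector idea a little more concrete, here is a toy sketch. Every number in it is made up: entry [i, j] of the matrix says how much a unit of improvement in sector j helps sector i, and power iteration finds the mix of improvements that reinforces itself most strongly when applied over and over. For a matrix with nonnegative entries where every sector eventually helps every other one (an irreducible matrix), the Perron-Frobenius theorem says this dominant eigenvector can be chosen with all entries positive, so it reads naturally as an investment mix.

```python
import numpy as np

# Hypothetical sectors and made-up numbers; nothing here is measured data.
# helps[i, j] = how much a unit of improvement in sector j helps sector i.
sectors = ["cloud", "targeted ads", "games"]
helps = np.array([
    [0.5, 0.2, 0.1],  # cloud is helped a bit by everything
    [0.4, 0.3, 0.0],  # ads are helped by cloud and by other ad tech
    [0.3, 0.4, 0.1],  # games are helped by cloud and by ads
])

def power_iteration(m: np.ndarray, steps: int = 200) -> tuple[float, np.ndarray]:
    """Approximate the dominant eigenvalue and eigenvector of m."""
    v = np.ones(m.shape[0])
    for _ in range(steps):
        w = m @ v
        v = w / np.linalg.norm(w)
    eigenvalue = v @ m @ v  # Rayleigh quotient; v has unit length
    return eigenvalue, v

lam, v = power_iteration(helps)
for name, weight in zip(sectors, v / v.sum()):
    print(f"{name}: {weight:.2f}")
```

The shares that come out depend entirely on the invented matrix; the point is only that “infrastructure that helps other infrastructure” has a precise linear-algebra analogue.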

Is cryptocurrency infrastructure? I was excited about working on cryptocurrency application platform stuff, because it seems like infrastructure. Some of the modern trendy crypto things, like NFTs, seem like they are not infrastructure, and I am less into them. A work of art is not infrastructure, blockchain or no blockchain.

Are hedge funds infrastructure? My instinct is no but it probably depends on what exactly they are doing. There are certainly some financial services which make other businesses possible. And there are certainly some financial entities which are just making money via shuffling stuff around cleverly and nothing else really depends on them.

Conclusion

I think people should be proud to work on “infrastructure”. It is underrated because the creators don’t capture all of its indirect benefits.

-

Resolutions for 2022

Why make New Year’s resolutions? It seems like a cliché that people don’t follow through on them. I think I can do it, though. Let’s jump in.

1. Calorie Counting

I’ve tried a few different diet strategies in the past. I have never really done the mundane thing of counting calories and trying to stick to a certain limit, though. The most success I have had is from doing a low-carb diet. The main problem I have with sticking to a low-carb diet is that I end up eating with other people, like my family, and my kids in particular do not want or seem to need anything like a low-carb diet. So it’s both a huge pain and a huge temptation to be making high-carb food and then not eating it.

As far as I can tell, low-carb diets mostly work by annoying you into eating less. The upside is that they are conceptually simpler to stick with than counting calories. When you count calories you have to constantly do a whole lot of arithmetic. However, I feel like of all people I should be okay with constantly doing a whole lot of arithmetic as a way of life. So, let’s give it a try.

When my diet plans fail, it tends to be after a period of some success. At first things are going well, I have a lot of energy to keep it going, then after a while I lose emotional energy and stop wanting to continue. This is sort of what I am worried about with the calorie counting plan. Hence, this blog post about New Year’s resolutions. I hate admitting failure so if I can work up the emotional energy to quasi-publicly commit to something, it’s like transferring energy into the future via a subconscious emotional battery.

So, this resolution is simply to count calories. I’ve been at it for a day and a half or so, just using the default iOS Health app. I tried some more complicated apps, but they all seemed optimized for me to quickly enter the wrong data and to encourage me to engage in complicated retention schemes.

2. Astronomy Publication

Well, if you only know me from this blog and don’t talk to me in person this might seem crazy, but this goal for 2022 is to get my work published in some sort of astronomy journal. I’ve been doing some volunteer work with the Berkeley SETI people and some of it seems pretty promising. I should blog more on this later, I suppose, because I think a lot of the details will be interesting to other programmer types.

Basically, modern astronomy is more and more a “big science” thing. At least for radio astronomy, which is the sub-part of astronomy that I’ve been getting into. There are a few enormous radio telescopes, there are a relatively small number of teams that buy time on these telescopes, and the telescopes create a huge amount of data. The analyses that astronomers could do manually 20 years ago, nowadays require large-scale computer analysis.

My rough take on the field is, the astronomers could use a lot more software engineering support. You have ratios like 10 astronomers to 1 software engineer and it seems like they could use a ratio of 1 astronomer to 10 software engineers. So, that means that the marginal value of software engineering contribution is high.

If you are curious, you can check out turboSETI which I have contributed to a bit - it’s currently the gold standard analysis software for looking through the output of a radio telescope and seeing if there are any “alien-looking” signals. And you can also check out Peti which is an alternative way of doing things (tldr more GPU specific) that I have been working on.

Besides those, I’ve also been working on some “data pipeline” type stuff which isn’t public but is much more mundane: every once in a while we get a petabyte of data from this telescope, so let’s make sure all the machines do the various processing we want them to do, and that the data gets compressed and filtered and analyzed and indexed and stored and cross-analyzed.

So most of this work in radio astronomy isn’t like, a single person thinks hard and creates some theory and publishes it. It’s more like, there’s ten authors and everyone is doing some aspect of making this data analysis work. That’s fine. I just want to get my fingers in there. I need a sub-goal that happens before “finding aliens” because that’s too big a goal to just stick in a New Year’s resolution.

3. Exercise

In 2022 my goal is to work out four times a week. This one feels like a gimme, surprisingly enough, because I’ve been doing this pretty successfully in 2021. The key to making it work is that my wife and I do it together at a regular time. We have an extra room in our basement that we have now filled with some weights and some exercise machines, and we work out at the same time so that neither of us can wimp out without admitting to the other that we are skipping.

So, I just think it’s okay to have a resolution that is essentially a “maintenance goal” from the previous year. Diet and exercise things don’t just automatically stay in place; often stuff works for a while and then it stops working. So it really is important and nontrivial to keep this up. So I hereby resolve for it.

4. Blogging

Well, I was going to just have three resolutions. Then I was reading about the Law of Fives and thought how cool it would be to have five resolutions. So I thought for a while and I only really came up with a fourth one. So, fine. My goal for 2022 is to blog at least once a week.

Why? I don’t really want to get attention or page views, per se. It’s more that I think the rigor of writing things down forces me to think harder about things. I usually feel good about writing a blog post once I do it, but I just don’t end up doing it.

This resolution, I feel like it’s the most likely one to fail because I just stop caring about it. But, it’s also the easiest one to just phone it in and take some minimum action to technically achieve it. I find that once I start writing a blog post, though, I usually write a decent amount and think a decent amount about it. So let’s give this a try.

Now what?

I was thinking about a resolution about reading a lot of books. But, I just enjoy doing that. I don’t really need to resolve to read books because I will read books anyway.

When I think about a goal-setting process, the first thing that comes to mind is Facebook corporate goal-setting. Which seemed to work pretty well. So maybe at the end of Q1 I will write a little update about how goal-setting is going. Or maybe at the spring equinox, that’s more how the astronomy world paces itself. Nah… Q1 just feels right. Stay tuned!

-

The Random Number Generator Hypothesis

Life In A Simulation

Imagine an alien civilization with such advanced technology, they can construct a computer many orders of magnitude more powerful than ours. It’s powerful enough to simulate our entire universe. 10^10^100 operations is easy, for these alien computers.

One of these aliens sits down one day and starts to code. Before they get to the real interesting programming, they’ll have to import some libraries. File formats, network protocols, secure handshakes, cryptographically secure random number generators.

How do these aliens generate random numbers? Like our random number generators, they develop some basic rules to convert one state of computer memory to another, seed it with something like the current time, and run these rules forward a while. In practice, when a set of rules passes a variety of statistical tests, it is deemed “random enough” for practical uses. Eventually you discard the vast majority of the information, and just extract a few bits to use for your random numbers.
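Here is what “basic rules to convert one state of memory to another, seeded with the current time” can look like in practice: a sketch of Marsaglia’s xorshift64 generator in Python. I chose it for brevity, and it is not cryptographically secure. Real cryptographically secure generators use much stronger update rules and are seeded from operating-system entropy rather than from the clock. The class and method names are made up for this example.

```python
import time

MASK64 = (1 << 64) - 1

class XorShift64:
    """Marsaglia's xorshift64: a tiny, fast, NOT cryptographically secure PRNG."""

    def __init__(self, seed: int):
        # The state must never be zero, or every later state is zero too.
        self.state = (seed & MASK64) or 0x9E3779B97F4A7C15

    def step(self) -> int:
        """Apply the fixed update rule once and return the new 64-bit state."""
        x = self.state
        x ^= (x << 13) & MASK64
        x ^= x >> 7
        x ^= (x << 17) & MASK64
        self.state = x
        return x

    def random_bits(self, k: int) -> int:
        """Advance the state, then keep only the top k of its 64 bits."""
        return self.step() >> (64 - k)

rng = XorShift64(seed=time.time_ns())  # seeded with the current time
print([rng.random_bits(1) for _ in range(8)])
```

Each call to `random_bits` runs the rules forward and then throws away most of the state, keeping only a few bits as output.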

Of course, these alien computers are extremely powerful. So powerful, they can easily spare 10^10^50 bits for a random number generator. And these rules for their random number generator… we call them the “laws of physics”.

Long ago, the aliens running our simulation seeded the Big Bang. They’re going to run our universe for a hundred billion years. At the end of it, they’ll count the number of electrons. If it’s an odd number, the output is a one. If it’s an even number, the output is a zero. And there you go. One random bit! Random bits are useful for practical applications, of course. It’s not like our universe is going to waste. There’s a real meaning to it.

Okay, cool story, so what?

Philosophically, the simulation hypothesis suggests that humans are living in a simulation. There’s a simple argument for it: if civilizations create simulations, and each one can run many of them, then there are more simulated civilizations than “natural” ones. Therefore any particular civilization is probably in a simulation.

But this doesn’t tell us anything about the nature of the simulation. Call a scenario like the one I described above the “random number generator hypothesis”, or “RNG hypothesis”: humans are living in a simulation, but it’s just a random number generator. The creators of the simulation don’t care about us at all. They will never intervene. And there’s no way for us to interact with the outside of the simulation.

I claim that the RNG hypothesis is more likely than a simulation in which the simulators care about any intelligent life in the universe. The argument is simple. Lots of programs need random number generators! But it’s fairly rare to write a simulation that is aimed to simulate some sort of being where you inspect its behavior. If the aliens’ programming behavior is remotely like our own, we are far more likely to be in a random number generator than in the sort of simulation where the simulators are paying any attention.

Does this matter in any way? If the RNG hypothesis is true, it is indistinguishable from a world in which we are not in a simulation, where the laws of physics simply exist and always have existed. So, in a sense the hypothesis is scientifically meaningless.

And that’s my point, really. Whether we are in a simulation or not, it doesn’t mean anything. If we could communicate with the aliens that created our universe, yeah that would mean something. If we could discover useful things about the laws of physics by analogy to the rules of a simulation, yeah that would mean something. But just being in a simulation, by itself, is meaningless. Don’t lose any sleep over it.