-

Metamagical Themas, 40 Years Later

It is completely unfair to criticize writing about technology and artificial intelligence 40 years after it’s written. Metamagical Themas is a really interesting book, a really good, intelligent, and prescient book, and I want to encourage you to read it. So don’t take any of this as “criticism” of the author. Of course, after 40 years have passed, we have an unfair advantage, seeing which predictions panned out, and what developments were easy to overlook in an era before the internet.

Instead of a “book”, perhaps I should call it a really interesting collection of essays written by Douglas Hofstadter for Scientific American in the early 1980’s. Each essay is followed by Hofstadter’s commentary written a year or two after. So you get some after-the-fact summation, but the whole thing was all written in the same era. This structure makes it really easy to read - you can pick it up and read a bit and it’s self-contained. Which is yet another reason you should pick this book up and give it a read.

There are all sorts of topics so I will just discuss them in random order.

Self-Referential Sentences

This is just really fun. I feel like cheating, quoting these, because I really just want you the reader to enjoy these sentences.

It is difficult to translate this sentence into French.

In order to make sense of “this sentence”, you will have to ignore the quotes in “it”.

Let us make a new convention: that anything enclosed in triple quotes - for example, ‘'’No, I have decided to change my mind; when the triple quotes close, just skip directly to the period and ignore everything up to it’’’ - is not even to obe read (much less paid attention to or obeyed).

This inert sentence is my body, but my soul is alive, dancing in the sparks of your brain.

Reading this is like eating sushi. It’s just one bite but it’s delicious and it’s a unique flavor so you want to pay attention to it. You want to pause, cleanse your mind, have a bit of a palate refresher in between them.

The reader of this sentence exists only while reading me.

Thit sentence is not self-referential because “thit” is not a word.

Fonts

Hofstadter loves fonts. Fluid Concepts and Creative Analogies goes far deeper into his work on fonts (and oddly enough was the first book purchased on Amazon) but there are some neat shorter explorations in this essay collection.

To me, reading this is fascinating because of how much deep learning has achieved in recent years. My instincts nowadays are often to be cynical of deep learning progress, thinking thoughts like “well is this really great progress if it isn’t turning into successful products”. But comparing what we have now to what people were thinking in the past makes it clear how far we have come.

The fundamental topic under discussion is how to have computers generate different fonts, and to understand the general concept that an “A” in one font looks different from an “A” in another font.

Hofstadter is really prescient when he writes in these essays that he thinks the task of recognizing letters is a critical one in AI. Performance on letter recognition was one of the first tasks that modern neural networks did well, that proved they were the right way to go. And that’s several generations of AI research away from when Hofstadter was writing!

Roughly, in the 80’s there was a lot of “Lisp-type” AI research, where many researchers thought you could decompose intelligence into symbolic logic or something like it, and tried to attack various problems that way. The initial “AI winter” was when that approach stopped getting a lot of funding. Then in the 90’s and 2000’s, statistical approaches like support vector machines or logistic regression seemed to dominate AI. The modern era of deep learning started around 2012 when AlexNet had its breakthrough performance on image recognition. Hofstadter is writing during the early 80’s, in the first era of AI, before either of the two big AI paradigm shifts.

That said, it’s interesting to see what Hofstadter misses in his predictions. Here he’s criticizing a program by Donald Knuth that took 28 parameters and outputted a font:

The worst is yet to come! Knuth’s throwaway sentence unspokenly implies that we should be able to interpolate any fraction of the way between any two arbitrary typefaces. For this to be possible, any pair of typefaces would have to share a single, grand, universal all-inclusive, ultimate set of knobs. And since all pairs of typefaces have the same set of knobs, transitivity implies that all typefaces would have to share a single, grand, universal, all-inclusive, ultimate set of knobs…. Now how can you possibly incorporate all of the previously shown typefaces into one universal schema?

Well, nowadays we know how to do it. There are plenty of neural network architectures that can both classify items into a category, and generate items from that category. So you train a neural network on fonts, and to interpolate between fonts, you grab the weights defined by each font and interpolate between them. Essentially “style transfer”.

Of course, it would be impossible to do this with a human understanding what each knob meant. 28 knobs isn’t anywhere near enough. But that’s fine. If we have enough training data, we can fit millions of parameters or billions of parameters.

It’s really hard to foresee what can change qualitatively when your quantitative ability goes from 30 to a billion.

By the way, if you like Hofstadter’s discussions of fonts and you live in the San Francisco area, you would like the Tauba Auerbach exhibit at SFMOMA.

Chaos Theory

Hofstadter writes about chaos theory and fractals, and it’s interesting to me how chaos theory has largely faded out over the subsequent decades.

The idea behind chaos theory was that many practical problems, like modeling turbulence or predicting the weather, don’t obey the same mathematics that linear systems do. So we should learn the mathematics behind chaotic systems, and then apply that mathematics to these physical cases.

For example, strange attractors. They certainly look really cool.

Chaos theory seemed popular through the 90’s - I got some popular science book on it, it was mentioned in Jurassic Park - but it doesn’t seem like it has led to many useful discoveries. I feel like the problem with chaos is that it fundamentally does not have laws that are useful for predicting the future.

Meanwhile, we are actually far better nowadays at predicting the weather and modeling turbulent airflow! The solution was not really chaos theory, though. As far as I can tell the solution to these thorny modeling problems was to get a lot more data. Weather seems really chaotic when your data set is “what was the high temperature in Chicago each day last year”. If you have the temperature of every square mile measured every 15 minutes, a piecewise linear model is a much better fit.

I think numeric linear algebra ended up being more useful. Yeah, when you predict the outcome of a system, often you get the “butterfly effect” and a small error in your input can lead to a large error in your output. But, you can measure these errors, and reduce them. Take the norm of the Jacobian of your model at a point, try to find a model where that’s small. Use numerical techniques that don’t blow up computational error. And get it all running efficiently on fast computers using as much data as possible.

There’s a similar thing happening here, where the qualitative nature of a field changed once the tools changed quantitatively by several orders of magnitude.

AI in 1983

These essays make me wonder. What should AI researchers have done in 1983? What would you do with a time machine?

It’s hard to say they should have researched neural networks. With 6 MHz computers it seems like it would have been impossible to get anything to work.

AI researchers did have some really great ideas back then. In particular, Lisp. Hofstadter writes briefly about why Lisp is so great, and the funny thing is, he hardly mentions macros at all, which nowadays I think of as the essence of Lisp. He talks about things like having an interpreter, being able to create your own data structures, having access to a “list” data structure built into the language, all things that nowadays we take for granted. But this was written before any of Java, Python, Perl, C#, JavaScript, really the bulk of the modern popular languages. There was a lot of great programming language design still to be done.

But for AI, it just wasn’t the right time. I wonder if we will look back on the modern era in a similar way. It might be that modern deep learning takes us a certain distance and “hits a wall”. As long as GPUs keep getting better, I think we’ll keep making progress, but that’s just a guess. We’ll see!

-

A Theory Of Everything

Physicists have a concept of a hypothetical “theory of everything”. There’s a Wikipedia page on it. Basically, general relativity describes how gravity works, and it is relevant for very large objects, the size of planets or stars. Quantum mechanics describes how nuclear and electromagnetic forces work, and it is relevant for smaller objects, the size of human beings or protons. And these two models don’t agree with each other. We know there is some error in our model of physics because we don’t have any way of smoothly transitioning from quantum mechanics to gravity. A “theory of everything” would be a theory that combines these two, one set of formulas that lets you derive quantum mechanics in the limit as size goes down, and lets you derive general relativity in the limit as size goes up.

It would be cool to have such a theory. But personally, I feel like this is a really narrow interpretation of “everything”.

Consider quantum mechanics. You can use our laws of quantum mechanics to get a pretty precise description of a single helium atom suspended in an empty void. In theory, the same laws apply to any system of the same size. But when you try to analyze a slightly more complicated system - say, five carbon atoms - the formulas quickly become intractable, either to solve exactly or approximately.

The point of laws of physics is to be able to model real-world systems. Different forces are relevant to different systems, so it makes sense to think of our physical theories along the dimension of “what forces do they have a formula for”. And in that dimension there is basically one hole, the gap between quantum mechanics and general relativity.

But there is a different dimension, of “how many objects are in the system”. In this dimension, we have an even larger gap. We have good laws of physics that let us analyze a small number of objects. Quantum mechanics lets us analyze a small number of basic particles, and classical mechanics lets us analyze a small number of rigid bodies. We also have pretty good laws of physics that let us analyze a very large number of identical objects. Fluid mechanics lets us analyze gases and liquids, and we can also analyze things like radio waves which are in some sense a large number of similar photons.

But in the middle, there are a lot of systems that we don’t have great mathematical laws for. Modeling things between the size of DNA or a human finger. Maybe you essentially have to be running large-scale computer simulations or other sort of numerical methods here, rather than finding a simple mathematical formula. But that’s okay, we have powerful computer systems, we can be happily using them. Perhaps, rather than expressing the most important laws of physics as brief mathematical equations, we could be expressing them as complicated but well-tested simulation software.

To me, a real “theory of everything” would be the code to a computer program where you could give it whatever data about the physical world you had. A video from an iPhone, a satellite photo, an MRI, the readings from a thermometer. The program creates a model, and answers any question you have about the physical system.

Of course we aren’t anywhere near achieving that. But that seems appropriate for a “theory of everything”. “Everything” is just a lot of things.

-

Crow Intelligence

Consider this thought experiment. If you, with your current mind, were suddenly trapped in a crow’s body, would you ever be able to convince any humans that you were intelligent? Maybe not.

Real crows, of course, have some huge disadvantages. They don’t speak English, they don’t understand English, they don’t have other crows who can teach them how to communicate with humans.

When I was a kid, we learned in school that a key difference between humans and other animals is that humans were intelligent enough to use tools, and other animals could not. That lesson was slightly out of date even in the 80’s, as some examples of chimpanzees using tools had already been discovered. In the age of cheap, portable cameras, we’ve discovered a lot more.

Crows

Personally, I’m interested in crows because there are a lot of crows in the East Bay hills. They fly around my backyard, cawing at each other, and I keep wondering if there’s some underlying code to it.

You can sink a lot of time into watching videos of crows using tools. They can carve sticks into hooks, drop rocks into a pool to raise the water level, and manipulate sticks in their beak in various ways.

How smart are crows, really? In AI we have the idea of a Turing test, where something is human level intelligent if it seems just like a human through a written channel. Crows can’t pass a Turing test, primarily because they can’t even take a Turing test. They can’t communicate in a written channel in the first place.

I feel like it should be possible to demonstrate that crows are far more intelligent than people thought, by teaching a crow to perform… some sort of intelligent action. Like Clever Hans doing arithmetic, but not a scam.

Things That Aren’t Crows

Are crows the right animal to try out intelligence-training? Wikipedia has an interesting page that claims that forebrain neuron count is the best current predictor of animal intelligence and lists off animal species.

The most interesting thing about this list to me is that humans are not the top of the list! Humans are pretty close, with 20 billion forebrain neurons, but killer whales have twice as many, 40 billion forebrain neurons.

What if killer whales are actually smarter than humans? We don’t really understand whale communication. Project CETI is working on it - to me, it seems like the key limiting factor is data gathering. It’s just incredibly time-consuming to collect whale communication because you have to go boat around looking for whales. No matter how fancy your AI techniques are, at the end of the day if you have on the order of hundreds of communications, it seems hard to extract data.

I think you really need some sort of interaction with the intelligent creatures - children don’t just quietly listen to adult speech and suddenly understand it. They start talking themselves, you use simple words communicating with them, you do things together while talking, et cetera. All of that is just really hard to do with killer whales.

So most of the species with a similar number of forebrain neurons to humans are aquatically inconvenient. Killer whale, pilot whale, another type of pilot whale, dolphin, human, blue whale, more whales, more whales, more whales, more dolphins, more whales, more whales. Then you have gorillas, orangutans, chimpanzees, and another bunch of just extremely large animals. The Hyacinth macaw might be more promising. Only three billion forebrain neurons to a human’s 20 billion, but at least they are small.

Crows Again

Checking down this list, the smartest animal that actually lives near me is the raven. Basically a big crow - I think crows are similar, Wikipedia just lists ravens but not crows. Around a billion forebrain neurons. Squirrels can get through some complicated obstacle courses and crows are maybe 20 times smarter. I wonder if there’s some sort of clever obstacle course I could set up, have crows do it, and demonstrate they are intelligent.

As I type this, the crows have started to caw outside my window. I like to think they are expressing their support….

-

Lines And Squiggles

I previously wrote about searching for signals from other planets in radio telescope data, and thought I’d write about my current thinking.

We have lots of data coming in from the Green Bank telescope, and some other folks and I have been working on the data pipeline. Rather than collecting lots of data and having grad students manually analyze it months or years after the fact, we want to have the whole analysis pipeline automated.

I think for both scientific projects and corporate ones, the best part about automating your data pipeline is not just that you save a lot of human work, it’s that making it easy to do an analysis makes it easy to do an iterative loop where you do some analysis, realize you should change a bit of how it operates, then redo the analysis, and repeat to make your analysis smarter and smarter.

Current Problems

The best way for checking radio telescope data for this sort of signal over the past ten years or so has been a “cadence” analysis. You expect a coherent signal from another planet to look like a diagonal line on the radio telescope data. But you sometimes also get squiggles that look like diagonal lines from noise. So you point the telescope in a “cadence” - toward planet A, then toward random other target B, then back to A, then to random other target C, in a total of an ABACAD pattern.

The traditional algorithm is you write something to detect how “liney” a particular area is, and then you look at the cadence to find a sequence that goes, line, not line, line, not line, line, not line. And then everyone writes lots of papers about how precisely to detect lines amidst a bunch of other noise.

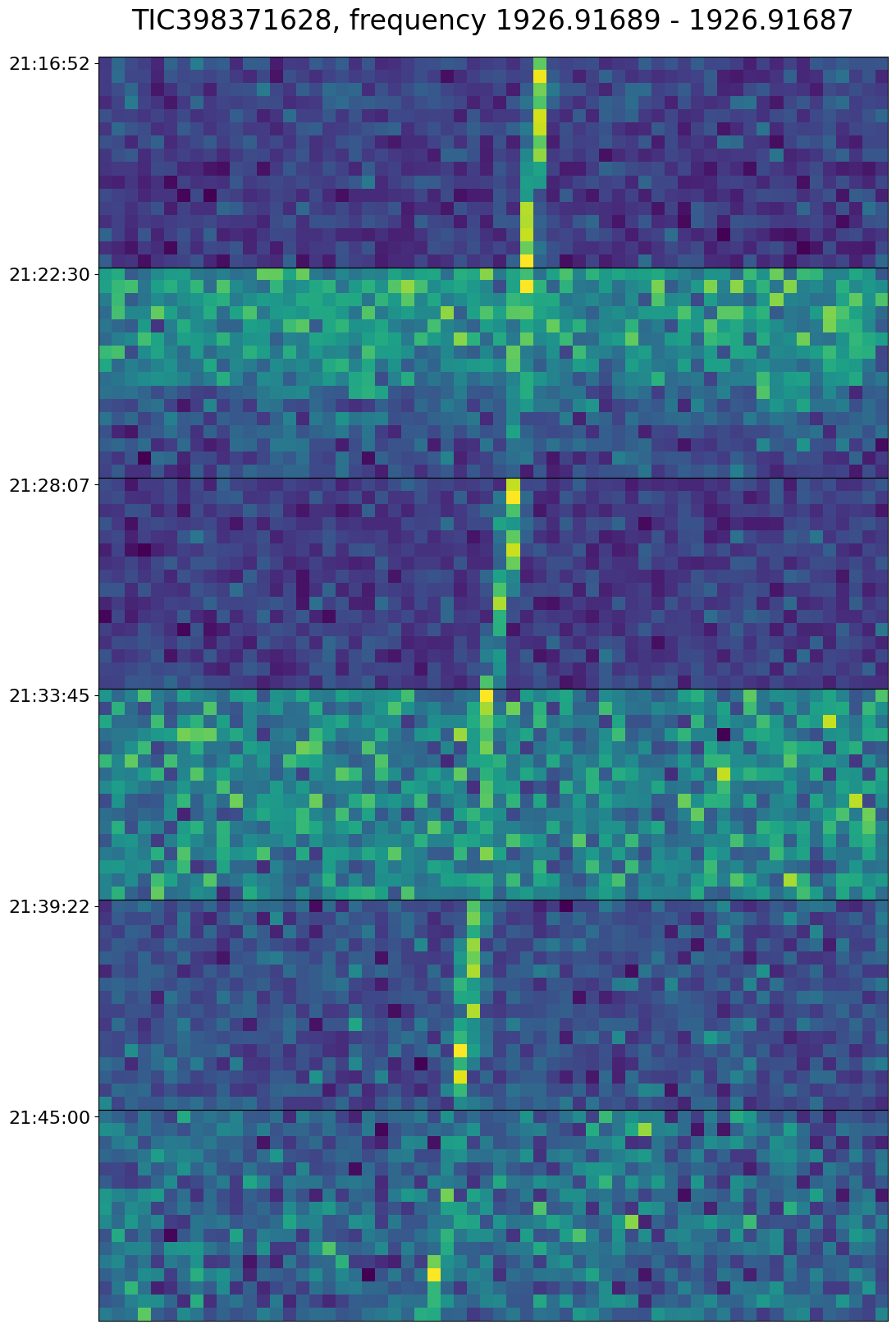

So in practice if you just set your threshold for “lininess” very high, you can get less data to look through, and so you just raise that threshold until the total output is human-manageable. I think in theory this is not quite the right thing to do - there end up being cases where you would clearly evaluate a single image as a line that are below this threshold. Lowering the threshold, though, you get false positives like this:

This is essentially a “threshold effect” - if we just categorize line versus not-line, then wherever we set the threshold, there will be some region that is noisy enough that the lines are only detectable half the time. And then in 1/64 of those cases, the coins happen to flip heads-tails-heads-tails-heads-tails and it looks like a cadence. But, humanistically, we see, oh this is really one single line that spans through all six of these images.

Maybe what we need to be doing is, rather than a two-way classification of “line” versus “not line”, we do a three-way classification of “line” versus “squiggle” versus “nothing”. And then for a cadence we really want line-nothing-line-nothing-line-nothing, maybe we would also be interested in line-nothing-squiggle-nothing-line-nothing, but line-squiggle-line-squiggle-line-squiggle is just uninteresting.

Maybe this example is more a “dot” than a “squiggle”, but I think in practice dots and squiggles are pretty similar. Both of them are things that don’t really look like lines, but also don’t really look like nothing, so it would be an unusual coincidence for one of them to occur where we expect nothing.

Perhaps I’ve lost all my readership at this point, but this is one of those blog posts where the primary value is clarifying my own thinking rather than educating you the reader per se.

Another way of thinking about this problem is, traditional methods of training a classifier don’t really make sense when your labeled data is all negatives. We need to break it down into sub-problems where we actually do have negative and positive examples. But we are breaking it down into the wrong sub-problems, because we need to be able to convert every vague sense of “we should have been able to automatically exclude this case” into an error in sub-problem classification.

To be continued…

-

Visiting SFMOMA

Near the end of Proust’s In Search Of Lost Time, the main character realizes that his own life can be the inspiration for a great work of literature. During this epiphany, Proust makes a brief digression to talk about art, and how to appreciate art you really need to pay attention not only to the art itself, but also the memories it triggers or the impression that it makes in you, the viewer.

Let me quote a few sentences.

Even where the joys of art are concerned, although we seek and value them for the sake of the impression that they give us, we contrive as quickly as possible to set aside, as being inexpressible, precisely that element in them which is the impression that we sought, and we concentrate instead upon that other ingredient in aesthetic emotion which allows us to savour its pleasure without penetrating its essence and lets us suppose that we are sharing it with other art-lovers, with whom we find it possible to converse just because, the personal root of our own impression having been suppressed, we are discussing with them a thing which is the same for them and for us. Even in those moments when we are the most disinterested spectators of nature, or of society or of love or of art itself, since every impression is double and the one half which is sheathed in the object is prolonged in ourselves by another half which we alone can know, we speedily find means to neglect this second half, which is the one on which we ought to concentrate, and to pay attention only to the first half which, as it is external and therefore cannot be intimately explored, will occasion us no fatigue. To try to perceive the little furrow which the sight of a hawthorn bush or of a church has traced in us is a task that we find too difficult. But we play a symphony over and over again, we go back repeatedly to see a church until - in that flight to get away from our own life (which we have not the courage to look at) which goes by the name of erudition - we know them, the symphony and the church, as well as and in the same fashion as the most knowledgeable connoisseur of music or archaeology. And how many art-lovers stop there, without extracting anything from their impression, so that they grow old useless and unsatisfied, like celibates of art!

The rest of the paragraph is pretty good, too.

Anyway, I used to think that when you looked at art you should use some sort of “evaluating art” skill, the way I would look at the design for a new database and think about whether it seemed like a good database design or not. And it just seemed like I lacked this skill, or found the development of this skill uninteresting. After reading this book I was curious to try out this “Proustian” way to appreciate art, where instead of wondering whether the art was good or not, you just look at it and if it triggers any memories, you think about those memories, see what things you can remember that you haven’t thought about in years, see if the art relates to those memories and if you can dig in somehow.

So last weekend I took a trip to SFMOMA with my 9-year-old son, and I really enjoyed this exhibit of American abstract art, which I never really appreciated very much before.

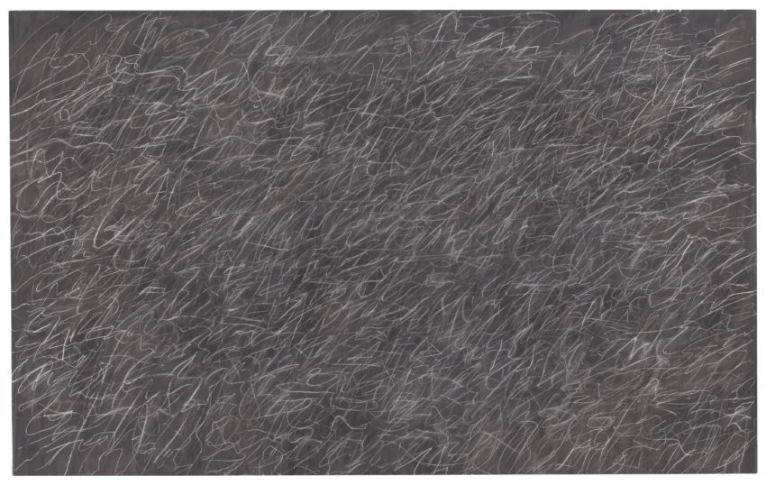

This piece reminds me of the blackboard in the first math class I took in college. The professor would get really emotionally engaged in writing things on the blackboard while talking about them, and in the process he would seem to stop paying attention to the blackboard itself. Often it would be impossible to distinguish different Greek letters, or different parts of a formula would run into each other.

Rather than completely erase the board, the professor would sort of quickly swipe an eraser and then start writing something else. The blackboard became like the catacombs beneath Mexico City, new buildings constructed on top of the ruins of past generations, with the clever archaeologist able to discern layers of different lemmas and corollaries.

It’s like the subject itself, abstract algebra. Theorems about rings, laid on top of theorems about fields, on top of integers, on top of groups, all above a historical process of figuring this stuff out that resembled the eventual outcome but shuffled around and with a bunch of false starts mixed in there.

Meanwhile, my son enjoyed the sculptures that looked like the sort of armor a bug monster would wear. The only flaw of the trip was the cold and terrible pizza from the kid’s menu at the cafe. Next time, I will encourage him toward the chicken tenders.